MATLAB

A full-featured development platform for math and science

MATLAB requires an environment module

In order to use MATLAB, you must first load the appropriate environment module:

module load matlab

This application is available in Open OnDemand.

MATLAB is a powerful scripting language and computational environment. It is designed for numerical computing, visualization and high-level programming and simulations. MATLAB also has parallel processing capabilities.

Using MATLAB on the HPC

Do not run MATLAB on the Login nodes

The MATLAB graphical user interface (GUI) depends on the Java Virtual Machine in order to start and is very memory-intensive. Due to the limited resources available on the Login Nodes, you will encounter sluggish performance, hard-to-diagnose issues, and general problems.

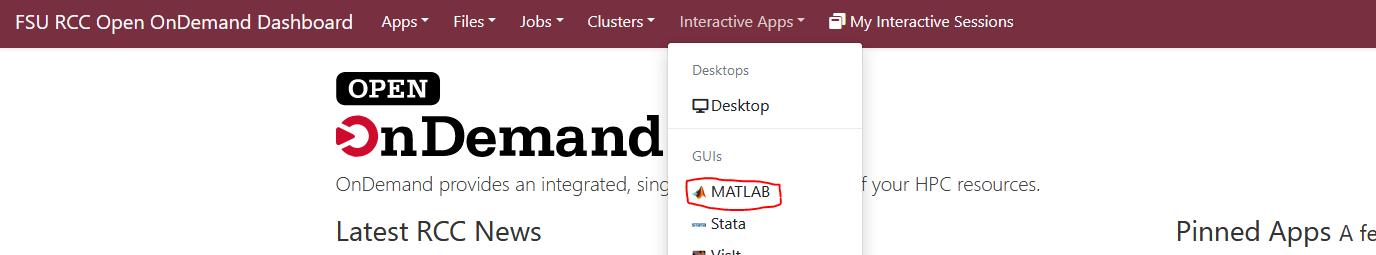

Instead, use our HPC web portal, Open OnDemand, to start a graphical interactive MATLAB session.

Limited License Notice

We maintain a limited number of MATLAB licenses at the RCC. When all available licenses are in use by other users, you must wait to check out a license.

Starting an interactive MATLAB session

Use our HPC web portal, Open OnDemand, to start a graphical interactive MATLAB session.

Enter the parameters for your job:

- If you intend to use MATLAB for four hours or less, we recommend the backfill partition, or if you have purchased compute resources on the HPC, use your owner partition.

- For optimal performance during the MATLAB session, we recommend allocating a minimum of 16 CPU cores and 16 GB of memory.

- MATLAB Version: Specify the preferred MATLAB version.

- Extra Module (Optional): This option allows you to select a specific version of an additional environment module if your project relies on add-ons or MEX files requiring a particular compiler version for compatibility.

Parallel usage of MATLAB on the HPC

MATLAB includes a powerful Parallel Computing Toolbox that is available by default on the HPC system. To learn how to use it, follow the tutorials and documentation provided by MathWorks (we recommend using Open OnDemand for this):

- Getting Started with Parallel Computing

- Parallel Computing Fundamentals

- Parallel FOR Loops in MATLAB

- Parallel Computing Toolbox Examples

Using pmode interactively

Below is an example of how to invoke interactive pmode with four workers:
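A minimal sketch of the command, assuming the default cluster profile and a MATLAB release that still provides pmode (newer releases no longer include it):

```matlab
% Start an interactive pmode session with four workers,
% using the default cluster profile.
pmode start 4
```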

This will open a Parallel Command Window (PCW). The workers will then receive commands entered in the PCW (at the P>> prompt), process them, and send the command output back to the PCW. You can transfer variables between the MATLAB client and the workers.

For example, to copy the variable x on worker (lab) 2 to the variable xc on the client, use:
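A sketch of the command, assuming the `pmode lab2client` form, where `2` is the index of the worker that holds x:

```matlab
% Copy variable x from worker (lab) 2 into variable xc on the client.
pmode lab2client x 2 xc
```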

Similarly, to copy the variable xc on the local client to the variable x on worker (lab) 2, use:
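A sketch of the reverse transfer, assuming the `pmode client2lab` form, where `2` is the index of the receiving worker:

```matlab
% Copy variable xc from the client into variable x on worker (lab) 2.
pmode client2lab xc 2 x
```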

You can perform plotting and other operations from inside the PCW.

You can also distribute values among workers. For example, to distribute the array x among workers, use:
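One way to do this, assuming x already exists with the same value on every worker, is to enter a `codistributed` call at the P>> prompt. The name `xd` is only illustrative:

```matlab
% Entered at the P>> prompt: split the replicated array x across the workers.
xd = codistributed(x);
```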

Use the numlabs, labindex, labSend, labReceive, labProbe, labBroadcast, and labBarrier functions, which are similar to MPI commands, for parallelizing. Refer to the MATLAB Manual for a full list of commands.

Using parpool interactively

The syntax for parpool is as follows:
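The common call forms can be sketched as follows. The pool size of 4 and the profile name 'local' are assumptions for illustration; newer MATLAB releases name the default local profile 'Processes'.

```matlab
% Common call forms (only one interactive pool can be open at a time):
%   parpool
%   parpool(poolsize)
%   parpool(profile, poolsize)
%   parpool(cluster, poolsize)

poolsize = 4;         % assumed example pool size
profile  = 'local';   % assumed profile name

% Open the pool and keep a handle to it.
ph = parpool(profile, poolsize);
```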

...where poolsize, profile, and cluster are, respectively, the size of the MATLAB pool of workers, the profile, and the cluster you created. The last line creates a handle named ph for the pool.

parpool enables the full functionality of the parallel language features (parfor and spmd) in MATLAB by creating a special job on a pool of workers and connecting the MATLAB client to the parallel pool.

The following example creates a pool of four workers, and runs a parfor-loop using the pool:
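A minimal sketch, assuming the default cluster profile. The loop body is a placeholder workload:

```matlab
% Open a pool of four workers using the default profile.
ph = parpool(4);

% Each iteration is independent, so parfor can spread them across workers.
n = 100;
a = zeros(1, n);
parfor i = 1:n
    a(i) = i^2;
end

% Close the pool when finished.
delete(ph);
```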

The following example creates a pool of 4 workers, and runs a simple spmd code block using this pool:
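A minimal sketch, assuming the default cluster profile. It uses labindex and numlabs, which newer MATLAB releases also expose as spmdIndex and spmdSize:

```matlab
% Open a pool of four workers using the default profile.
ph = parpool(4);

% Every worker runs the same block; labindex identifies the worker.
spmd
    localValue = labindex^2;
    fprintf('Worker %d of %d computed %d\n', labindex, numlabs, localValue);
end

% localValue is a Composite on the client; index it by worker number.
third = localValue{3};   % value computed on worker 3, i.e. 9

% Close the pool when finished.
delete(ph);
```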

Note: You cannot simultaneously run more than one interactive parpool session. You must delete your current parpool session before starting a new one. To delete the current session, use:
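Either form below should work. The 'nocreate' option is an addition here so that gcp does not start a new pool when none is open:

```matlab
% Delete the current pool, if one exists.
delete(gcp('nocreate'));

% Alternatively, if you kept the handle returned by parpool:
% delete(ph);
```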

...where the gcp utility returns the current pool, and ph is the handle of the pool.

Non-interactive job submission

The following examples demonstrate how to submit MATLAB jobs to the HPC Job Scheduler. You should already be familiar with how to submit jobs.

A note about MATLAB 2017a

You may notice several warning messages related to Java in the output files or on the terminal when running non-interactive jobs using MATLAB 2017a. While the cause of these warnings is not clear, they do not appear to cause any errors in the job runs themselves. These warnings can be safely ignored.

Single core jobs

The following example is a sample submit script (test1.sh) to run the MATLAB program test1.m, which uses a single core.

Note

test1.m should be a function, not a script. This can be as simple as enclosing your script in a function.
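As a sketch, a script can be wrapped like this. The body is a placeholder for your own code:

```matlab
function test1()
% TEST1  Wrapper that turns a script into a function (saved as test1.m).

% Original script contents go here (placeholder workload).
x = linspace(0, 2*pi, 100);
y = sin(x);
fprintf('Maximum of sin(x): %g\n', max(y));
end
```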

Note

The Parallel Computing Toolbox (PCT) cannot be used within your function. You cannot use parfor or any other command that utilizes more than one core.

Multicore jobs

You can submit MATLAB jobs to run on multiple cores by adding code in your MATLAB script that dynamically detects the Slurm --ntasks/-n parameter from your Slurm submit script:
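A minimal sketch, assuming Slurm exports the task count in the SLURM_NTASKS environment variable and that a profile named 'local' exists. The parfor body is a placeholder workload, and the fallback to one worker is an addition for running outside a Slurm job:

```matlab
% Number of tasks requested with --ntasks / -n in the submit script.
ntasks = str2double(getenv('SLURM_NTASKS'));
if isnan(ntasks)
    ntasks = 1;   % assumption: fall back to one worker outside a Slurm job
end

% Start a pool sized to the allocation ('local' is an assumed profile name).
pool = parpool('local', ntasks);

% Placeholder workload spread across the workers.
n = 1000;
results = zeros(1, n);
parfor i = 1:n
    results(i) = sqrt(i);
end

% Shut the pool down before the job exits.
delete(pool);
```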

However, if you need a large number of cores or a large amount of memory (i.e., 32+ cores or 64 GB+ of memory), make sure that your script starts only half as many workers as the number of cores you request. MATLAB's Parallel Computing Toolbox runs on the Java Virtual Machine (JVM), which has its own memory and core requirements. Starting fewer workers prevents the parallel pool from crashing when the JVM runs out of memory or cores for creating processes.
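The halving rule can be sketched as a small helper. The function name `workers_for_job` is hypothetical, and rounding down with a minimum of one worker is an assumption:

```matlab
function nworkers = workers_for_job(ncores)
% WORKERS_FOR_JOB  Hypothetical helper: half the requested cores, at least one.
nworkers = max(1, floor(ncores / 2));
end
```

It could then be used as `pool = parpool('local', workers_for_job(str2double(getenv('SLURM_NTASKS'))));`, again assuming a profile named 'local'.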

This way, your code can take advantage of MATLAB parallel computing constructs such as parfor and others. When your job completes, the last line will delete the parallel pool of workers before exiting.

Below is a typical multicore Slurm submit script for non-interactive MATLAB jobs:

A word on multiple node jobs

Multiple node jobs require the MATLAB Parallel Server, which is currently unavailable on the HPC. If your job needs more resources than are available on a single compute node, please let us know.

Using the MATLAB compiler

If you need to run a large number of simultaneous MATLAB jobs, we recommend that you compile your code into a binary executable. This allows you to write and test your code in the MATLAB IDE, and then compile it into a standalone executable when you are ready to run a production job. Using this method also allows you to avoid license restrictions for MATLAB.

The MATLAB compiler command is mcc and it is documented thoroughly in the official MATLAB documentation.

Example single-thread compilation

To compile the non-parallel code, test1.m, use the following command:
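A sketch of the command, assuming the standalone-application flag `-m` is the one intended; it can be entered at the MATLAB prompt:

```matlab
% Compile test1.m into a standalone executable.
mcc -m test1.m
```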

This will produce the following output files: run_test1.sh (run script) and test1 (binary).

Use the run_test1.sh script to load the environment and run the test1 binary:

Note that input arguments will be interpreted as string values, so any code that utilizes these arguments must convert these strings to the correct data type.
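For instance, a function that expects a number can convert its argument first. The function name `sum_to_n` is hypothetical:

```matlab
function total = sum_to_n(n)
% SUM_TO_N  Hypothetical example: sum the integers 1..n.

% A compiled executable receives its arguments as text, so convert first.
if ischar(n) || isstring(n)
    n = str2double(n);
end

total = sum(1:n);
fprintf('Sum of 1..%d is %d\n', n, total);
end
```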

One concern with this method is that the generated binary output will contain all the toolboxes available in your MATLAB environment, which may result in a large binary file. To avoid this, use the -N compiler flag. The -N compiler flag will remove all except essential toolboxes. You can then handpick which specific toolboxes or .m files you need using the -a option.
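A sketch combining the two flags, where `my_helper.m` is a hypothetical file that test1.m depends on:

```matlab
% Compile with a minimal path (-N) and explicitly add one needed file (-a).
mcc -m -N -a my_helper.m test1.m
```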

Parallel compilation with MATLAB

To compile a MATLAB program with the Parallel Computing Toolbox in MATLAB, use the following syntax:

To run the resulting output from the above command, you must provide a parallel profile:

This line can be run in any Slurm submit script without any modification, and no MATLAB licenses will be used.

More information is available in the MATLAB Help Page on the subject.

Using MATLAB with GPUs

MATLAB is capable of using GPUs to accelerate calculations. Many built-in functions have GPU-enabled alternatives.

To take advantage of GPUs, you will need to submit your jobs to Slurm accounts with GPU nodes. A list of general access Slurm accounts that have GPU nodes in them is documented on our general GPU information page. Alternatively, if you have access to purchased compute resources on our cluster, use your owner-based Slurm Account.

GPU example

You can try the following example, which is a Mandelbrot program, f_mandelbrot:
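The original listing is not reproduced here. A sketch of such a function, assuming this argument order and a standard escape-time iteration on gpuArray data, could be saved as f_mandelbrot.m:

```matlab
function [x, y, count] = f_mandelbrot(maxIterations, gridSize, xRange, yRange)
% F_MANDELBROT  Sketch of a GPU Mandelbrot computation (assumed signature).

% Build the coordinate vectors directly on the GPU.
x = gpuArray.linspace(xRange(1), xRange(2), gridSize);
y = gpuArray.linspace(yRange(1), yRange(2), gridSize);
[xGrid, yGrid] = meshgrid(x, y);

% Starting points in the complex plane, and per-point iteration counts.
z0 = complex(xGrid, yGrid);
count = ones(size(z0), 'gpuArray');

% Iterate z = z^2 + z0 and count how long each point stays bounded.
z = z0;
for n = 0:maxIterations
    z = z .* z + z0;
    inside = abs(z) <= 2;
    count = count + inside;
end

% Log scale makes the structure easier to see when plotted.
count = log(count);
end
```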

Run the program on a GPU node, and display the results using the following commands:
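A sketch of the calls, assuming f_mandelbrot takes the iteration limit, the grid size, and the x and y ranges in that order. The parameter values are illustrative:

```matlab
% Illustrative parameters (assumptions, not prescribed values).
maxIterations = 500;
gridSize      = 1000;
xRange        = [-2.0, 0.5];
yRange        = [-1.25, 1.25];

% Compute on the GPU.
[x, y, count] = f_mandelbrot(maxIterations, gridSize, xRange, yRange);

% Copy the results back to host memory and display them.
x = gather(x);
y = gather(y);
count = gather(count);
imagesc(x, y, count);
axis image;
colorbar;
title('Mandelbrot set computed on the GPU');
```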

The arrays x and y are generated on the GPU, utilizing its massively parallel architecture, which also handles the remainder of the computations that involve these arrays.