Submitting GPU jobs

The HPC supports GPU jobs and includes a growing number of compute nodes equipped with GPU hardware.

GPU resources available on the HPC

GPU resources on the HPC are available in the following Slurm accounts (partitions):

| GPU Model | Number | Slurm accounts/Partitions |
|---|---|---|
| NVIDIA GeForce GTX 1080 Ti | 28 (4x per node) | backfill2 (4 hour time limit; see below) |
| NVIDIA RTX A4500 Ada Generation | 40 (2x per node) | backfill2 (4 hour time limit; see below); gpu_q, and owner accounts |
| NVIDIA RTX A4000 | 16 (2x per node x 7 & 4x per node x 2) | backfill2 (4 hour time limit; see below); gpu_q, and owner accounts |
| NVIDIA RTX A4500 | 24 (2x per node x 12) | backfill2 (4 hour time limit; see below); gpu_q, and owner accounts |
| NVIDIA H100 Tensor Core GPU | 4 (2x per node x 2) | Limited access for pilot programs; Request access |

In addition, if your department, lab, or group has purchased GPU resources, they will be available on your owner-based Slurm account.

Free GPU jobs longer than four hours

If you need to run GPU jobs longer than four hours in our general access queues, we accept requests on a case-by-case basis. Contact us to request access.

If you have been approved and added to the genacc_gpu_users group, submit your longer-running GPU jobs to gpu_q:
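A minimal sketch of the relevant submit script lines, assuming the queue is selected with Slurm's -A (--account) option. The 12-hour walltime is only an illustrative value; the maximum allowed in gpu_q is not listed here.

```bash
# General access GPU queue; requires membership in genacc_gpu_users
#SBATCH -A gpu_q
# Request one GPU card per node
#SBATCH --gres=gpu:1
# Illustrative walltime longer than the four hour backfill2 limit
#SBATCH -t 12:00:00
```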

If your research group has purchased dedicated GPU resources on our system, you do not need to request access. Jobs submitted to your owner-based queue can run on GPUs for longer than four hours.
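For example, assuming a hypothetical owner account named my_lab_q (substitute your group's actual account name):

```bash
# my_lab_q is a hypothetical owner-based account name; use your group's account instead
#SBATCH -A my_lab_q
# Request two GPU cards per node from your group's GPU nodes
#SBATCH --gres=gpu:2
```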

Submitting GPU Jobs

Via Open OnDemand

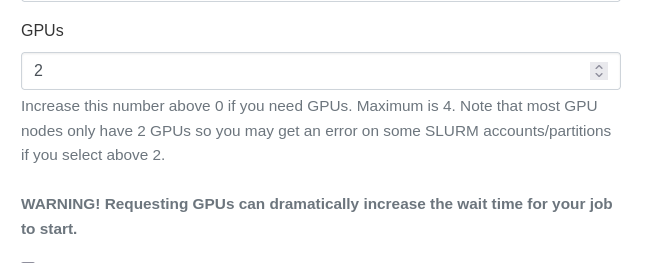

You can submit graphical jobs via Open OnDemand to run on GPU nodes. Note that this may increase your job wait time in the queue. Follow the instructions for submitting a job, and specify 1 or more GPUs in the "GPUs" field:

Note

Some interactive apps may not have this field. If you encounter this, please let us know.

Via the CLI

Tip

If you have not yet submitted a job to the HPC cluster, please read our tutorial first.

If you wish to submit a job to node(s) that have GPUs, add the following line to your submit script:
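A minimal sketch of that line, requesting one GPU card per node; change the number after gpu: to request more:

```bash
# Request one GPU card on each node allocated to the job
#SBATCH --gres=gpu:1
```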

Each GPU node contains two to four GPU cards. Specify the number of GPU cards per node you need after the --gres=gpu: directive.

For example, if your job requires four GPU cards, specify 4:
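Sketched as a submit script directive:

```bash
# Request four GPU cards per node; only nodes with at least four GPUs can satisfy this
#SBATCH --gres=gpu:4
```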

Full Example Submit Script

The following HPC job will run on a GPU node and print information about the available GPU cards:
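A minimal sketch of such a script, assuming the backfill2 account is selected with -A; the job name gpu_info and the ten minute walltime are hypothetical values. It prints CUDA_VISIBLE_DEVICES, which Slurm normally sets for jobs that request GPUs, and then runs nvidia-smi to describe the visible cards.

```bash
#!/bin/bash
# Hypothetical job name
#SBATCH -J gpu_info
# General access account with a four hour time limit
#SBATCH -A backfill2
# One task on one node
#SBATCH -N 1
#SBATCH -n 1
# One GPU card per node
#SBATCH --gres=gpu:1
# Ten minute walltime
#SBATCH -t 00:10:00

# Slurm normally sets CUDA_VISIBLE_DEVICES to the GPU indices assigned to this job
gpus="${CUDA_VISIBLE_DEVICES:-none}"
echo "GPUs assigned to this job: ${gpus}"

# nvidia-smi is installed with the NVIDIA driver and reports each visible GPU card
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi
else
    echo "nvidia-smi not found; this does not appear to be a GPU node"
fi
```

Submit it with sbatch, for example sbatch gpu_info.sh (the file name is hypothetical).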

Your job output should look something like this:

Using specific GPU models

You can view the specific GPU models in the cluster with the sinfo command:
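A sketch of one way to do this, using sinfo's output format option: %N prints node names and %G their generic resources (GRES), and grep keeps only the lines that mention GPUs. The check for sinfo simply skips the query on machines without Slurm.

```bash
# sinfo is part of Slurm, so it is only available where Slurm is installed, such as on the HPC
if command -v sinfo >/dev/null 2>&1; then
    # -h drops the header line; %N = node names, %G = generic resources (GRES).
    # grep keeps only lines that advertise GPUs, for example entries like gpu:a4500:2
    gpu_nodes=$(sinfo -h -o "%N %G" | grep "gpu:")
    printf '%s\n' "$gpu_nodes"
else
    gpu_nodes=""
    echo "sinfo not found; run this command on the HPC"
fi
```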

Note

GPUs are only available in specific Slurm accounts/queues.

In your submit script, request a specific model with a gres line of the form gpu:a4500:[#], where [#] is the number of GPU cards per node. For example:
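A sketch requesting two cards with the a4500 GRES name per node; check the sinfo output for the exact GRES names of other models:

```bash
# Request two GPU cards of the a4500 type on each node allocated to the job
#SBATCH --gres=gpu:a4500:2
```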

More information

For more information and examples, refer to our CUDA software documentation.