Desmond

Simulation software for molecular dynamics

Desmond requires an environment module

In order to use Desmond, you must first load the appropriate environment module:

module load desmond

Desmond is software developed by D. E. Shaw Research for performing molecular dynamics simulations of biological systems on conventional commodity clusters. On the FSU HPC cluster, Desmond is installed as a component of the Schrodinger software suite, which also includes Maestro, a graphical tool for preparing and visualizing molecular systems. The Schrodinger suite is available on the shared HPC storage system at the following path:

Using the Schrodinger Suite for the Molecular Dynamics Simulation Process

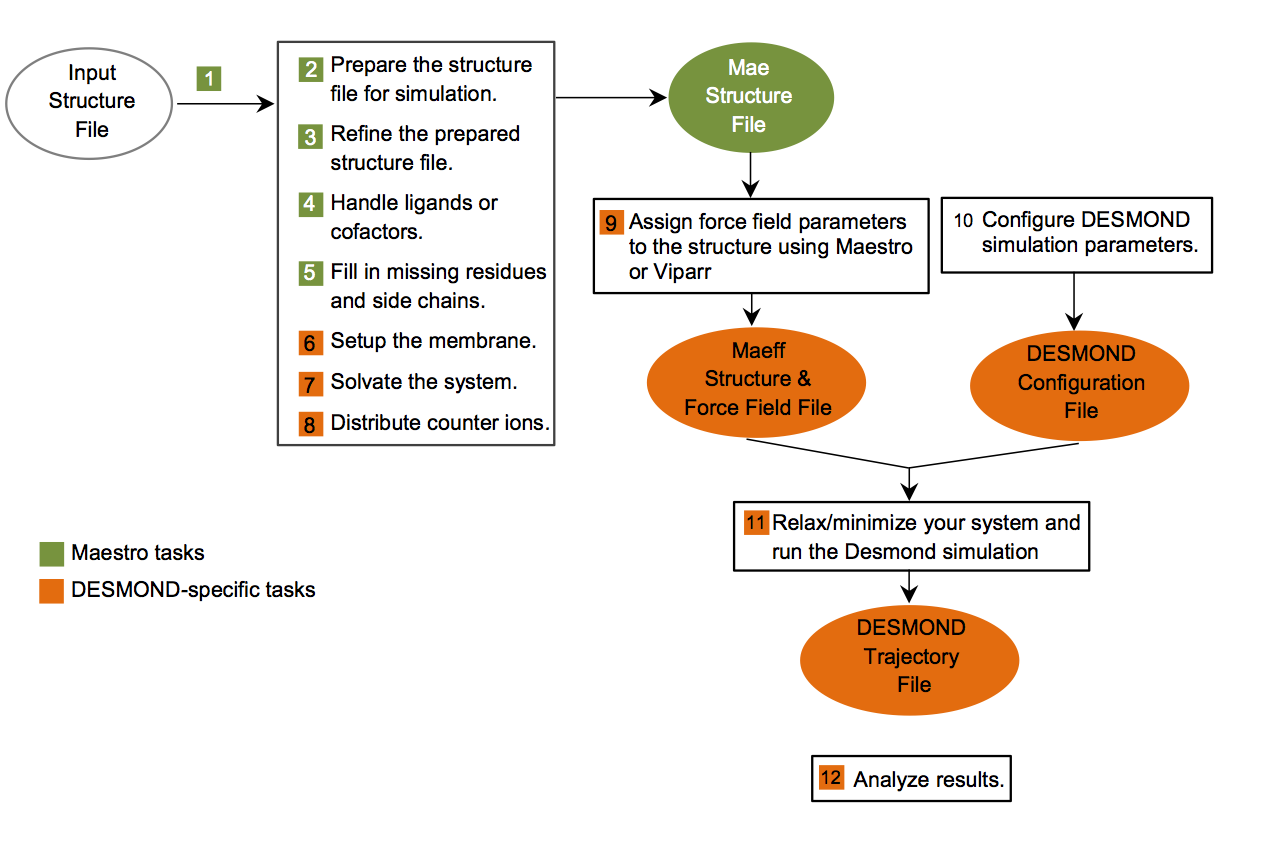

The procedure for running a molecular dynamics simulation using Desmond and Maestro can be summarized in the following figure:

In particular, the original structure file obtained from the Protein Data Bank (PDB) must first be prepared (preprocessed) in Maestro to produce a structure file that includes the force field; this file is the input of the Desmond simulation. The configuration file contains the simulation parameters, such as the global cell, the force field, any constraints, and the integrator.

Warning

It is not advisable to run GUI software on the HPC login nodes. We suggest that you install Maestro on your personal computer, prepare the structure file on your laptop or desktop, and then run the Desmond simulation (the CPU-intensive step) on the HPC cluster.

Command Line Syntax for Desmond

The syntax for Desmond is as follows:
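Judging from the three option groups described below and the SCHRODINGER installation-path variable used with the single-machine example, the general form is presumably the following template. It is a sketch, not an authoritative signature; run desmond -h to confirm it for the installed version.

```shell
# General form (template only): replace each bracketed group with real options.
"$SCHRODINGER/desmond" [Job_Options] [Backend_Options] [Backend_Arguments]
```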

The possible [Job_Options] include the following:

| Syntax | Command Meaning |
|---|---|
| -h | print the help message. |
| -v | print version information and exit. |
| -WAIT | do not exit until the job completes. |
| -p, -NPROC | number of processors to be used (default is 1). |
| -JOBNAME name | the name of this job. |
| -gpu | run the GPU version. |
| -jin filename | files or directories to be transferred to the compute node. |
| -jout filename | files to be copied back to the submit node. |
| -dryrun backend_cfg | generate the backend config file only. |

The possible [Backend_Options] include the following:

| Syntax | Command Meaning |
|---|---|
| -comm plugin | use communication plugin (serial or mli). |
| -c config_file | parameter file for the simulation. |
| -tpp n | number of threads per processor. |
| -dp | run the double precision version (single precision by default). |
| -noopt | do not optimize parameters automatically. |
| -overwrite | overwrite the trajectory. |
| -profile | enable backend profiling. |

The possible [Backend_Arguments] include the following:

| Syntax | Command Meaning |
|---|---|
| -in x.cms | the structure file. |
| -restore checkpoint | a checkpoint file for resuming a simulation. |

(Run the desmond -h command for details.)

For example, to run a Desmond simulation on a single machine with the input structure file x.cms and the configuration file y.cfg, enter the following:

where SCHRODINGER is the environment variable holding the path to the Schrodinger software installation.
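A minimal sketch of such a command, built only from options listed in the tables above, might look like this. The files x.cms and y.cfg are the ones named in the text; the processor count of 4 is an arbitrary placeholder.

```shell
# Run Desmond on the current machine with 4 processors (-NPROC, default is 1).
# -WAIT keeps the command in the foreground until the simulation completes.
"$SCHRODINGER/desmond" -WAIT -NPROC 4 -c y.cfg -in x.cms
```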

Command Line Options for Schrodinger Job Control Facility

In addition to the Desmond-specific options above, some important command-line options are recognized directly by the Schrodinger Job Control Facility. These options tell the Job Control Facility to run a job on a specified host or to submit it to a queue.

Here, host is the value of a name entry (not the host entry) in the schrodinger.hosts file (see the discussion below) or the actual address of a host, and n (n_1, n_2, ..., n_k) is the number of cores to use on that host. When specifying more than one host, separate them with spaces and enclose the whole list in quotes.

This option passes arguments to the queue manager. These arguments are appended to those specified by the qargs setting in the hosts file, schrodinger.hosts.

This option specifies the scratch directory for the job. The job directory is created as a subdirectory of the scratch directory. We suggest using $HOME/scratch or $HOME/_tmp.

Some options display the runtime configuration that will be used for Desmond. For example:

This option shows the section of the schrodinger.hosts file that will be used for this job, provided that the -HOST host option points to a section of the hosts file.
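For instance, to spread a run over two hosts with 8 cores each, the host list is passed as a single quoted, space-separated argument. This is a sketch: node01 and node02 are hypothetical host names, and the host:n notation is assumed from the usual Schrodinger convention described above.

```shell
# Two hosts with 8 cores each; the quoted list is one argument to -HOST.
"$SCHRODINGER/desmond" -HOST "node01:8 node02:8" -c y.cfg -in x.cms
```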

Remark: Command-line options always take precedence over the corresponding environment variable.

Running Desmond Simulation on the HPC Cluster

Molecular dynamics simulations are CPU-intensive. A Desmond simulation can run on the HPC cluster in two ways:

- Via the Slurm job submit script (recommended)

- Through Schrodinger's Job Control Facility

Submitting Desmond Jobs Using a Slurm Script

Below is an example Slurm submit script:

where x.cms and y.cfg are the structure file and the simulation parameter (configuration) file, respectively.
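A hedged sketch of such a script follows. The job name, core count, and time limit are placeholders; the genacc_q partition is borrowed from the hosts-file example on this page; and the script assumes that loading the desmond module sets SCHRODINGER.

```shell
#!/bin/bash
# Job name (placeholder)
#SBATCH -J desmond_md
# Partition: genacc_q, the queue used in the hosts-file example on this page
#SBATCH -p genacc_q
# One node with 8 tasks (placeholder core count; adjust to your needs)
#SBATCH -N 1
#SBATCH -n 8
# Wall clock limit (placeholder: 1 day)
#SBATCH -t 1-00:00:00

# Assumes the module sets SCHRODINGER to the Schrodinger installation path.
module load desmond

# -WAIT keeps this script running until the simulation finishes;
# -NPROC passes the number of tasks Slurm allocated to this job.
"$SCHRODINGER/desmond" -WAIT -NPROC "$SLURM_NTASKS" -c y.cfg -in x.cms
```

The -WAIT option matters here: without it, desmond returns before the job completes, so the batch script would likely end and Slurm would release the allocation while the simulation is still running.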

Submitting Jobs Using the Schrodinger Job Control Facility

The Job Control Facility obtains information about the hosts on which it will run jobs (and other information needed by the queue) from the hosts file, schrodinger.hosts, and submits and monitors the job for you. The default hosts file is located in:

An example entry of the schrodinger.hosts is as follows:

In this example:

- genacc_q is the name of the entry (each entry describes one job submission scenario).
- submit is the name of the host (the login node of the HPC cluster).
- slurm is the queuing software.
- qargs contains the command-line options passed to qsub (or to sbatch for Slurm). In the genacc_q queue, the wall clock limit is 14 days.
- tmpdir is the scratch space for the Desmond application (in your own schrodinger.hosts file, you can use, for example, $HOME/scratch).

To submit a parallel job using the above configuration, input the following:

The -HOST genacc_q flag above tells the Schrodinger Job Control Facility to use the entry named genacc_q in the schrodinger.hosts file to create the job submit script. Consequently, the simulation is submitted to the genacc_q queue, requesting 2 nodes with 8 cores each.
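As a sketch, such a submission might look like the following. It assumes the host:n notation also applies to a hosts-file entry name, so 16 cores (2 nodes × 8 cores) are requested from the genacc_q entry; how those cores are laid out across nodes depends on the entry's qargs and on the scheduler. The job name desmond_md is hypothetical.

```shell
# Let the Job Control Facility build and submit the Slurm script from the
# genacc_q entry in schrodinger.hosts, requesting 16 cores in total.
"$SCHRODINGER/desmond" -HOST genacc_q:16 -JOBNAME desmond_md -c y.cfg -in x.cms
```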

To use your own hosts file, create a directory named .schrodinger in your home directory, copy the file below into it, and edit it:

You also need to define the environment variable SCHRODINGER_HOSTS so that it points to this file:
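The steps above can be sketched in shell as follows. Run it in your login shell rather than as a separate script, because export only affects the current shell; add the export line to ~/.bashrc to make it permanent. The entry name my_genacc_q is hypothetical, the other values mirror the genacc_q entry described above, and the qargs string is an assumed sbatch equivalent of the 14-day limit. Keep the entries you copied from the default file, since Job Control may also rely on its localhost entry.

```shell
# Personal hosts file location (directory name from the text above).
hosts_dir="$HOME/.schrodinger"
hosts_file="$hosts_dir/schrodinger.hosts"
mkdir -p "$hosts_dir"

# Append a personal queue entry. The unquoted EOF lets $HOME expand to your
# actual home directory. "my_genacc_q" and the qargs string are hypothetical.
cat >> "$hosts_file" <<EOF

name:   my_genacc_q
host:   submit
queue:  slurm
qargs:  -p genacc_q -t 14-00:00:00
tmpdir: $HOME/scratch
EOF

# Point the Job Control Facility at the personal hosts file.
export SCHRODINGER_HOSTS="$hosts_file"
```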

Monitoring Jobs Using the Schrodinger Job Control Facility

Besides the job control commands provided by the job scheduler, such as squeue and sbatch, Desmond simulations can be monitored with the Schrodinger Job Control Facility, which provides tools for monitoring and controlling the jobs it runs. Information about each job is kept in the user's job database, in the directory $HOME/.schrodinger/.jobdb.

A Desmond job can be manipulated and monitored by the Schrodinger utility jobcontrol as follows:

In the above, command is the action you want to perform, and query defines the scope of that action.
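Assuming jobcontrol sits in the Schrodinger installation directory alongside desmond, the general form and one concrete query (the -list command with the active query, both listed below) might look like this:

```shell
# General form: "$SCHRODINGER/jobcontrol" -<command> <query>
# Example: list every job in your job database that has not finished yet.
"$SCHRODINGER/jobcontrol" -list active
```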

The possible command options include:

| Syntax | Command Meaning |
|---|---|
| -cancel | cancels a job that has been launched but not yet started |
| -kill | terminates a job immediately |
| -list | lists the JobID, job name, and status |
| -resume | continues running a paused job |
| -dump | shows the complete job record |

The possible query options include:

| Syntax | Command Meaning |
|---|---|
| all | all jobs in your job database |
| active | all active (not finished) jobs |
| finished | all finished jobs |
| JobID | a single job. The JobID is a unique identifier consisting of the name of the submission host, a sequence number, and a hexadecimal timestamp, e.g., submit-0-a1b2c3d4. |

For example, to list all the jobs in your job database that finished successfully, enter:

To list only the job whose JobID is submit-0-a1b2c3d4, enter:

To list the complete database record for a job, enter the command:
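The three commands described above would presumably be the following, reusing the example JobID from the query table. Note that the finished query, as described in that table, covers all finished jobs; whether it also filters out failed jobs is not stated here.

```shell
# List all jobs in the job database that have finished
"$SCHRODINGER/jobcontrol" -list finished

# List a single job by its JobID (example ID from the table above)
"$SCHRODINGER/jobcontrol" -list submit-0-a1b2c3d4

# Show the complete database record for that job
"$SCHRODINGER/jobcontrol" -dump submit-0-a1b2c3d4
```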